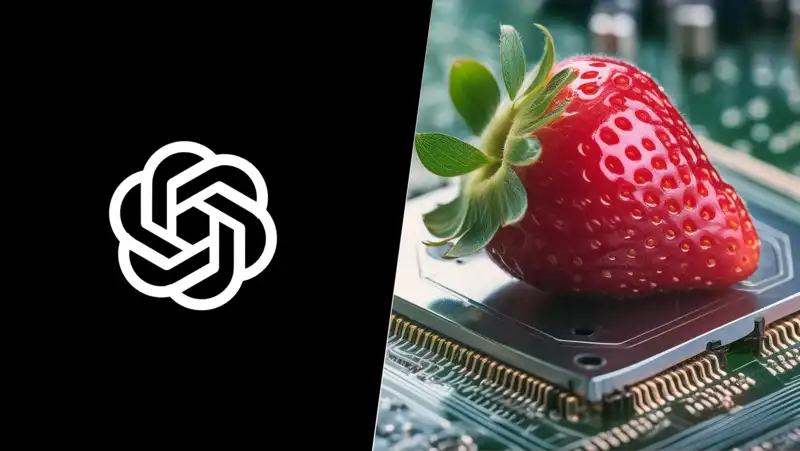

OpenAI has released o1-mini, the smallest version of its new reasoning family of artificial intelligence models, for free on ChatGPT. It can be used without registering an account.

Unlike other AI models such as GPT-4 or Anthropic's Claude, o1 works through a problem step by step before responding with a final output. This greatly reduces error rates and “hallucinations” and allows for longer, more carefully reasoned responses.

There are some limitations to the o1 model family. It takes quite a long time to produce a response, it has no multimodal capabilities, and it cannot accept documents or images. It only works with text.

The name “mini” is also somewhat misleading: it is a much smaller model than o1-preview, but it is built on a more modern architecture and performs better in some respects.

Like the larger GPT-4o, o1-mini is available to free users, but with rate limits that are quickly used up. These limits apply to paid members as well, although paid members can use it several more times per week.

When OpenAI first announced the o1 family of models last week, it said that users would get only 30 messages per week to try them out. This is because the models are quite expensive to run: they work over a prompt repeatedly until they arrive at a response they judge to be correct.

Unlike other models, it can generate fairly large outputs, such as entire codebases or multi-page reports.

OpenAI limits how often the models can be used. It has since raised those limits well beyond the initial 30 messages per week, both because of demand and because people are still trying to figure out exactly what the models are useful for.

First, OpenAI reset the limits for everyone. It then announced that the o1-preview limit would increase from 30 to 50 messages per week, and the o1-mini limit to over 200 (for paid members).

If you are using one of the o1 models for the first time, you need to take a slightly different approach than you would with GPT-4o or Gemini.

With those models, you tend to treat the exchange almost like a Google search: ask a question, add some details, and hope for the best. The o1 models, however, need more specific prompts in order to reason through their responses.

In one test, I gave a rather vague prompt, asking it to create an “iOS gamified task manager app”. Asking the regular ChatGPT model that question would have produced all the code needed to run the app, at least at a very basic level. However, o1-mini gave me a step-by-step design document for building the app and an overview of the codebase structure.

Therefore, if you use o1-mini to build an app, make sure your instructions are specific and explicitly ask it to provide the code needed to develop the app. That level of specificity carries through to every use case.
