The AI model DenseAV learns the meaning of words and the position of sounds without human input or text, just by watching videos, the researchers said In the paper, researchers at MIT, Microsoft, Oxford and Google explain that DenseAV can do this using only self-supervision from video

To learn these patterns, it uses audio-video-controlled learning to associate certain sounds with the observable world This learning mode means that the visual aspect of the model is not able to gain insights from the audio side (and vice versa)

Learn by comparing pairs of audio and visual signals to determine which data is important This is how denseav can learn without labels, because it's easier to understand the language and predict what you're seeing from what you're hearing when you can recognize sounds

The idea of this process was shocked when Mark Hamilton, a PhD student at MIT, watched the film "The March of the Penguins" There are certain scenes where penguins fall and moan

"It's almost obvious that this moan stands for a 4-letter word when you see it This was the moment I thought I might need to use audio and video to learn the language," Hamilton said in an MIT news release

His aim was to make the model learn the language by predicting what it sees from what it hears and vice versa So if you hear someone say, "Grab that violin and start playing," it's likely that you'll meet a violin or a musician This game of matching audio and video was repeated in different videos

Once this was done, the researchers focused on the pixels the model was looking at when it heard a certain sound — a person saying "cat" triggers the algorithm to start looking for a cat in the video Seeing which pixels the algorithm chooses means that it can discover what it thinks a particular word means

But let's say DenseAV heard someone say "cat" and later heard the cat meow, the AI may still identify the cat's image in the shot But does that mean the algorithm thinks the cat is the same thing as the cat's meow?

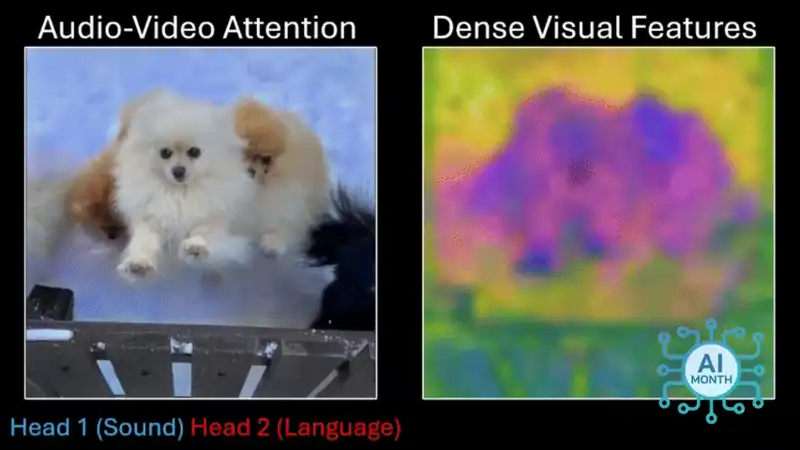

The researchers explored this by giving DenseAV a "double-sided brain" and found that one side of the brain naturally focused on language and the other focused on meow-like sounds So DenseA actually learned the different meanings of both words without human intervention

The already huge amount of video content means that ai can be trained in something like educational video

"Another exciting application is to understand new languages like dolphin and whale communication, which do not have written forms of communication," Hamilton said

The next step for the team is to create a system that can learn from video or audio-only data

Comments